Cancer is a disease driven by mutation and copy-number aberration (CNA) yet most of the data generated to date has focused on SNV and InDel calling – the easy part of DNA analysis, particularly in ctDNA. This means that not enough focus has been put on copy-number calling. Prof Carlos Caldas’s group at CRUK-CI have a great paper out showing how well sWGS in FFPE breast cancer tissue can be used to call CNAs. The paper is well worth a read, the group show they can generate CNA data from virtually all of their archived FFPE samples using sWGS and irrespective of DNA quality – I’ll summarise some of the best bits here. It’s a great read and they’ve really shown how well FFPE CNV can be done using a low-cost and low-input method: Shallow whole genome sequencing for robust copy number profiling of formalin-fixed paraffin-embedded breast cancers.

A brief history from my personal perspective on sWGS for CNVs…in 2012 Peter van Loo (Twitter) gave a memorable talk at CRUK-CI where he showed CNV calling from 0.1x coverage – many people in the audience were sceptical. Also in 2012 we beta-tested Illumina’s first generation Nextera exome kit and had library left over from almost all samples – so we pooled them for sWGS and CNV calling; this led to our developing a workflow for exome CNV calling using sWGS of pre-capture libraries with the Caldas group (see Bruna et al). Also in 2013 we started a project that would lead to the first ctDNA exomes using Rubicon Genomics technology (see Murtaza et al). And in 2014 an excellent paper from Bauke Ylstra’s group at NETHERLANDS Twitter showed how well CNAs could be called from shallow whole genome sequencing (see Scheinin et al), they used data as low as 0.1x. And that’s the tool used in the Caldas group paper.

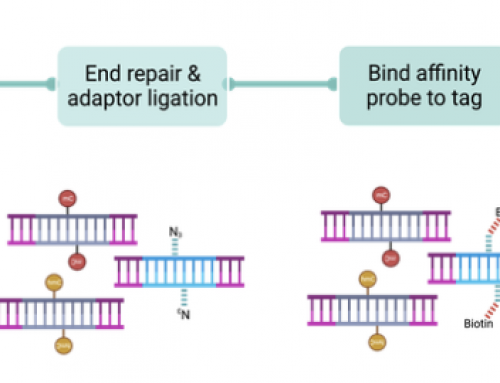

The Caldas group paper: The authors profiled 356 breast cancers from as little as 3.8ng DNA using Illumina FFPE TruSeq or the RubiconGenomics Thruplex DNASeq kits (now Takara). Analysis was with QDNAseq, which uses circular binary segmentation of aligned data in 100 kb windows and corrects for mappability and GC content.

The Rubicon kits which the authors used gave excellent results on low input DNA and they appear to compare very well to Illumina’s FFPE kit. The CNV profiles from the Illumina kit had less noise but had much higher DNA input (200 vs 50ng) and 10x higher sequencing depth (0.9x vs 0.08x). Downsampling to the same depth resulted in CNV calling that was pretty much the same across kit type, and increasing the sequencing depth from 0.08x to 0.15x in 23 Rubicon libraries improved data. Additionally, the authors reported that sequencing over 7 million reads provided very little in the way of additional noise reduction. These data show that sequencing depth is more important than DNA input but that there may be a maximum useful depth – so more samples is better than more sequences.

CNV calls were graded as Very Good (237), Good (142), Intermediate (47), Poor (12) or Fail (8) and there was no correlation with kit type (see Table 2 and Fig 4 images below). The lower quality sequencing libraries were not associated with quantity or quality of input DNA. The downsampling and increased sequencing depth experiments above suggested that lower quality libraries were more likely caused by loss of DNA during library preparation washes, or may have been caused by inaccurate library quantification (see Pre-pooling qPCR library quant at the end of this post).

In the paper the authors discussed reasons for failure of a small number of libraries. But before getting to that I want to remind you how many libraries were considered poor or failed – 3% & 2%. Amazingly of 446 libraries made 95% were considered intermediate or better and 85% were Good or Very Good. Of the 14 failures that could be repeated, either by making a new library (n=6) or by resequencing (n=8), only 2 could not be rescued. Increasing sequencing depth rescued some of the failed libraries that and this is probably why the authors suggest that pre-pooling quantification may be the reason for failure.

Summary: The paper is a very good demonstration of what’s possible from FFPE and is likely to encourage people to pick up the sWGS assay and start to dig into the impact of copy-number aberrations and variations in a tissue type where they’ve been a little ignored in the past. The small number of failures suggest we design experiments to be robust to drop-out, or we start replicating these sWGS data and combining data from two 0.1x runs to get the highest quality possible?

The Rubicon kit is likely to be a better bet in the lab as it required less input DNA and is easier to run, partly due to fewer processing steps and in the washes (Illumina requires 6 to Rubicon’s 1). And if you go by the quality of run data, at the 0.08x coverage for Rubicon, then you could run an additional 450 libraries with the sme sequencing costs as just 45 Illumina libraries at 0.1x! And you’d only have used ¼ of the DNA!!

Two things were missed opportunities for the authors, 1) they could have retested the pre-pooling qPCR quant to confirm their speculation that this was a cause of failure and 2) they mentioned the ability to use libraries prepped for sWGS for hyb-cap SNV and InDel analysis, “Whilst we haven’t performed target enrichment on our FFPE libraries”, it was such a shame they did not generate this data to add into the paper. Especially since sWGS generates much nicer CNV calls than exomes, and can generate genome-wide CNVs seven when combined with small panels (I really don’t understand why this has not become a standard practice).

PS: Pre-pooling qPCR library quant: This is an assay which has never really been good enough primarily because it relies on SYBR-based assays rather than TaqMan. SYBR estimates molecular count from intensity but relies on you having a good measure of fragment length (something most people don’t want to bother with), whereas TaqMan would count molecules directly. I blame Illumina entirely for this one as they knew it was an issue that could be fixed in the early days – in their defence that was when we had different adapters for single-end, paired-end and miRNA libraries so would have required development of three rather than one Quant kit.

In the Genomics Core the standard protocol is to run a singlicate qPCR for pre-pooling quant and triplicate qPCR for final library pre-sequencing quant. This is mainly due to scale, throughput and cost. When you make 96 libraries in a plate qPCR and run them in triplicate it burns through lots of qPCR reagents and time – and as the assay is pretty robust you don’t see a big gain in the quality of results. For replicated RNA-Seq experiments (the majority of the work we did/do in this lab) this really is not a problem. But for CNV calling where samples are not being replicated it causes drop-out (as seen in this paper).

Leave A Comment