The number of samples being run in sequencing projects is rapidly increasing as groups move to running replicates (why on earth we didn’t do this from day one is a little mind-boggling). Most experiments today use some form of multiplexing, commonly by sequencing single- or dual-index barcodes as separate reads. However, there are other ways to crack this particular nut.

DNA Sudoku was a paper I found very interesting. It uses a combinatorial pooling design that, upon deconvolution, identifies the individual a specific variant comes from. We used similar strategies for cDNA library screening, using 3-dimensional pooling of cDNA clones in 384-well plates.

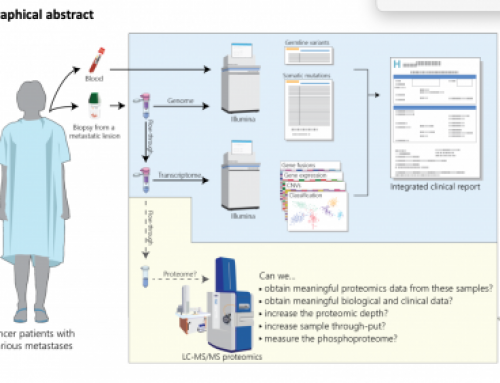

One of the challenges of next-gen sequencing is getting the barcodes onto the samples as early in the process as possible, to reduce the number of libraries that ultimately get sequenced. If barcodes are added at ligation then every sample needs to be handled independently from DNA extraction through QC, fragmentation and end repair. Ideally we would get barcodes on immediately after DNA extraction, but how?

A paper in Bioinformatics addresses this problem very neatly but, in my view, oversimplifies the technical challenges users might face in adopting the strategy. In this post I’ll outline the authors' approach, address the biggest challenge in pooling (pipetting), and highlight a very nice instrument from Labcyte that could help if you have deep pockets!

Varying the amount of DNA from each individual in your pool: In “Weighted pooling—practical and cost-effective techniques for pooled high-throughput sequencing”, David Golan, Yaniv Erlich and Saharon Rosset describe the problems that multiplexing can address, namely the effort and cost of NGS. They present a method that relies on pooling DNA from individuals at different starting concentrations and using the number of reads in the final NGS data to deconvolute the samples, without resorting to adding barcodes. They argue that their weighted design strategy could be used as a cornerstone of whole-exome sequencing projects. That’s a pretty weighty statement!
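To make the idea concrete, here is a minimal sketch of how unequal input amounts can point back to a carrier. It is not the authors' algorithm (the paper uses a statistical model of read counts); it is a nearest-fraction toy that assumes diploid samples, heterozygous carriers only, no sequencing error and perfect pipetting. The function names and the 1:2:4 weights are my own, chosen for illustration.

```python
from itertools import combinations


def expected_fractions(weights):
    """Expected variant-read fraction for every possible set of heterozygous carriers.

    weights maps sample name -> relative amount of DNA put into the pool.
    """
    total = sum(weights.values())
    names = sorted(weights)
    table = {}
    for k in range(len(names) + 1):
        for carriers in combinations(names, k):
            # A heterozygous carrier contributes half of their DNA share as variant reads.
            table[carriers] = sum(weights[c] for c in carriers) / (2 * total)
    return table


def call_carriers(alt_reads, total_reads, weights):
    """Return the carrier set whose expected fraction is closest to the observed one."""
    observed = alt_reads / total_reads
    table = expected_fractions(weights)
    return min(table, key=lambda carriers: abs(table[carriers] - observed))


# Hypothetical pool of three samples mixed 1:2:4.
weights = {"A": 1, "B": 2, "C": 4}
print(call_carriers(143, 1000, weights))  # 143/1000 is closest to 2/14, so sample B
print(call_carriers(360, 1000, weights))  # closest to 5/14, so samples A and C
```

With weights that are powers of two, every combination of carriers gives a different expected fraction, which is why the toy can separate them. Real data are noisier, so the gaps between adjacent fractions matter a great deal.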

The paper addresses some of the problems faced by pooling. In it they note that the current modelling of NGS reads is not perfect: a Poisson distribution is used, whereas reads in actual NGS data sets are usually more dispersed. They argue that this can be overcome by sequencing more deeply, which is pretty cheap to do.

There is a whole section in the paper (6.2) on “cost reduction due to pooling”. The two major costs they consider are genome capture ($300/sample, the figure used in the calculations below) and sequencing (PE100, $2,200/lane). Pooling reduces the number of captures required but increases the sequencing depth needed per post-capture library. They use a simple example where 1Mb is targeted in 1000 individuals.

In a normal project, 1000 library preps and captures would be performed and 333 post-capture libraries sequenced in each lane to get 30x coverage. The cost is $306,600 (1000×$300 + 3×$2,200).

In a weighted pooling design with 185 pools of DNAs (at different starting concentrations), only 185 library preps and captures would be performed, but only 10 post-capture libraries can be sequenced in each lane to get the same 30x coverage of each sample. The cost of the project drops to $96,200 (185×$300 + 18.5×$2,200).
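The two totals are easy to check, and to re-run with your own prices. This is just the arithmetic from the post wrapped in a small function; the function name and constants are mine, and the per-capture and per-lane costs are the ones used in the sums above.

```python
CAPTURE_COST = 300   # USD per library prep and capture, as used in the sums above
LANE_COST = 2200     # USD per PE100 lane


def project_cost(n_captures, n_lanes):
    """Total cost of captures plus sequencing lanes, in USD."""
    return n_captures * CAPTURE_COST + n_lanes * LANE_COST


# Conventional design: 1000 captures, 333 libraries per lane, so 3 lanes.
conventional = project_cost(1000, 3)

# Weighted pooling: 185 captures, 10 libraries per lane, so 18.5 lanes.
pooled = project_cost(185, 18.5)

print(f"conventional: ${conventional:,.0f}")
print(f"pooled:       ${pooled:,.0f}")
print(f"saving:       ${conventional - pooled:,.0f}")
```

Note where the money goes: in the conventional design almost all of it is capture, whereas in the pooled design sequencing becomes a sizeable share of the budget, so the saving is sensitive to lane price.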

There is a trade-off, as you can lose the ability to call variants of MAF >4%, but this should be OK if you are looking for rare variants in the first place.

Multiplexing can go wrong in the lab: We have seen multiplexed pools that are very unbalanced. Rather than nice 1:1 equimolar pooling the samples have been mixed poorly and are skewed. The best might give 25M reads and the worst 2.5M reads, and if you need 10M reads per sample then this will result in a lot of wasted sequencing.
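A rough way to put a number on that waste is sketched below. It assumes that running more lanes scales every sample's yield by the same factor, and it counts any reads above the per-sample requirement as wasted. The read counts are made up for illustration, apart from the 25M best case, 2.5M worst case and 10M requirement mentioned above.

```python
def wasted_sequencing(reads_per_sample, required):
    """Estimate the extra sequencing needed to rescue the worst sample in a pool.

    reads_per_sample: observed reads per sample (millions) from one run.
    required: reads needed per sample (millions).
    Returns (scale factor, total reads after scaling, reads above requirement).
    """
    # Scale the whole pool until the worst sample just reaches the requirement.
    scale = max(1.0, required / min(reads_per_sample))
    scaled = [r * scale for r in reads_per_sample]
    total = sum(scaled)
    wasted = sum(r - required for r in scaled)
    return scale, total, wasted


# Hypothetical unbalanced six-sample pool, in millions of reads.
reads = [25, 18, 12, 10, 6, 2.5]
scale, total, wasted = wasted_sequencing(reads, required=10)
print(f"need {scale:.1f}x the sequencing; {wasted:.0f}M of {total:.0f}M reads are surplus")
```

In practice you would usually re-pool or top up the weak samples rather than simply sequence four times as much, but the sum shows how quickly a skewed pool gets expensive.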

Golan et al.’s paper does not explicitly model pipetting error. This is a big hole in the paper from my perspective, but one that should be easily filled. The major issues are pipetting error during quantification, leading to poor estimation of pM concentration, AND pipetting error during normalisation and/or pooling. This is where the bioinformaticians need some help from us wet-lab folks, as we have some idea of how good or bad these processes are.
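As a starting point for that conversation, here is a small simulation of what pipetting error could do to a weighted pool. Everything in it is an assumption on my part rather than anything from the paper: quantification and pooling error are lumped into a single coefficient of variation (CV), the error is taken to be Gaussian and independent per sample, and the planned weights are invented. It simply asks how often the delivered amounts end up in a different rank order from the design.

```python
import random


def simulate_pool_fractions(weights, cv, rng):
    """Apply independent Gaussian error (coefficient of variation cv) to each planned weight."""
    # Clamp at a tiny positive value so a large negative draw cannot give a negative volume.
    delivered = [max(1e-9, rng.gauss(w, cv * w)) for w in weights]
    total = sum(delivered)
    return [d / total for d in delivered]


def misordering_rate(weights, cv, trials=2000, seed=1):
    """Fraction of simulated pools whose delivered amounts are no longer in the planned order.

    weights must be given in increasing order.
    """
    rng = random.Random(seed)
    bad = 0
    for _ in range(trials):
        fractions = simulate_pool_fractions(weights, cv, rng)
        if fractions != sorted(fractions):
            bad += 1
    return bad / trials


# Hypothetical design with fairly close weights.
planned = [1.0, 1.5, 2.0, 2.5]
for cv in (0.02, 0.10, 0.30):
    print(f"CV {cv:.0%}: misordered in {misordering_rate(planned, cv):.1%} of pools")
```

Swapping in measured CVs for your own quantification and pooling steps would give a feel for how far apart the weights in a design need to be before it is worth trying at the bench.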

There are also some very nice robots that can simplify this process. One instrument in particular stands out for me, and that is the Echo liquid handling platform, which uses acoustic energy to transfer 2.5 nl droplets from source to destination plates. There are no tips, pins or nozzles, and zero contact with the samples. Even un-normalised plates of 96 libraries could be quickly and robustly mixed into complex weighted designs. Unfortunately the instrument costs as much as a MiSeq, so expect to see one at Broad, Sanger, Wash U or BGI but not in labs like mine.
