We’re about to start a pilot work project with 10X Genomics, so I put together an update to share internally and thought it would make a good post here too. I’ve been thinking about how 10X might be used in cancer and rare disease, as well as in non-human genomes. The NIST results described below are particularly interesting because the same sample has been run on all of the major long-read technologies (10X Genomics, Moleculo, CGI LFR, PacBio, BioNano Genomics and Oxford Nanopore), as well as on both Illumina and SOLiD mate-pair. Enjoy.

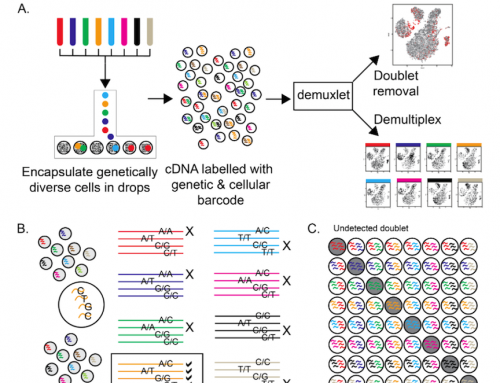

An overview/reminder of “Genome Partitioning” technology: the basic idea behind 10X (and similar genome partitioning methods) is to make a reasonably standard NGS library, but one that includes molecular barcodes so you can tell which DNA molecule each read came from. Library prep is performed with such a small amount of DNA in each reaction that there is a very low probability that both the maternal and paternal alleles of a locus will be present in the same reaction. Many library preps are made simultaneously and combined so that the whole genome can be sequenced to high coverage. After sequencing, reads are aligned to the genome as normal and can then be assigned to individual DNA molecules by their molecular barcodes.
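The molecule-assignment step can be sketched in a few lines of Python. This is a minimal illustration, not 10X’s software: it assumes each aligned read has already been tagged with its barcode, and it uses a hypothetical `max_gap` of 50kb to decide where one molecule ends and another carrying the same barcode begins (molecules that share a barcode usually come from distant parts of the genome).

```python
from collections import defaultdict
from typing import Iterable, List, NamedTuple


class Read(NamedTuple):
    barcode: str
    chrom: str
    pos: int  # alignment start position


class Molecule(NamedTuple):
    barcode: str
    chrom: str
    start: int  # start of the first read assigned to the molecule
    end: int  # start of the last read assigned to the molecule
    n_reads: int


def reconstruct_molecules(reads: Iterable[Read], max_gap: int = 50_000) -> List[Molecule]:
    """Group barcoded, aligned reads into putative source molecules.

    Reads with the same barcode on the same chromosome are sorted by position
    and split into separate molecules wherever two consecutive reads are more
    than max_gap bp apart (max_gap is an illustrative assumption).
    """
    groups = defaultdict(list)
    for read in reads:
        groups[(read.barcode, read.chrom)].append(read.pos)

    molecules = []
    for (barcode, chrom), positions in sorted(groups.items()):
        positions.sort()
        start = prev = positions[0]
        count = 1
        for pos in positions[1:]:
            if pos - prev > max_gap:
                # Gap too large: close the current molecule and start a new one.
                molecules.append(Molecule(barcode, chrom, start, prev, count))
                start, count = pos, 0
            count += 1
            prev = pos
        molecules.append(Molecule(barcode, chrom, start, prev, count))
    return molecules


if __name__ == "__main__":
    reads = [
        Read("AAACGT", "chr1", 1_000),
        Read("AAACGT", "chr1", 9_000),
        Read("AAACGT", "chr1", 200_000),  # same barcode, but a different molecule
        Read("GGTTCA", "chr1", 5_000),
    ]
    for molecule in reconstruct_molecules(reads):
        print(molecule)
```

Molecule ends here are the start positions of the outermost reads, so they slightly underestimate the true molecule length.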

The 10X Genomics “secret sauce” is mainly the number of partitions they create compared to other technologies such as Moleculo, but also the small amount of input DNA used and the automation of the whole process. In the Moleculo approach a single DNA sample is distributed across a 384-well plate, and libraries are made with 384 molecular indexes. 10X Genomics use their proprietary gel-bead technology to create around 100,000 partitions; they have a library of over 750,000 molecular indexes and use just 1ng of input DNA. Everything required to make the phased NGS library is included, so you can go straight into WGS or into the capture step of an exome-seq or similar workflow.
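Because around 100,000 partitions draw from a pool of around 750,000 barcodes, some barcodes will inevitably be used in more than one partition. A back-of-the-envelope sketch, assuming (purely for illustration) that each partition independently receives a barcode drawn uniformly at random from the pool:

```python
def shared_barcode_fraction(n_partitions: int, n_barcodes: int) -> float:
    """Expected fraction of partitions whose barcode also occurs in at least one
    other partition, assuming every partition independently receives a barcode
    drawn uniformly at random from a pool of n_barcodes."""
    if n_partitions < 1 or n_barcodes < 1:
        raise ValueError("need at least one partition and one barcode")
    # Probability that none of the other n_partitions - 1 partitions drew the same barcode.
    p_unique = (1.0 - 1.0 / n_barcodes) ** (n_partitions - 1)
    return 1.0 - p_unique


if __name__ == "__main__":
    frac = shared_barcode_fraction(100_000, 750_000)
    print(f"{frac:.1%} of partitions share their barcode with another partition")
```

Under this assumption roughly 12% of partitions share a barcode; in the Moleculo design each of the 384 wells has its own index, so the question does not arise. Sharing is tolerable because two molecules with the same barcode will usually map to unrelated regions of the genome.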

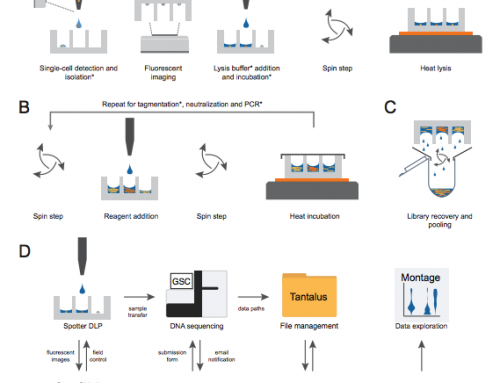

The partitions in 10X Genomics technology are droplets, each containing about 5fg of DNA (0.1% of the genome), the library prep mastermix, and millions of copies of a single index oligo carried on a gel bead. The gel bead is a 54µm aqueous gel that dissolves to release its oligos into the mastermix:DNA solution. The oligos contain several features: Illumina’s P5 adapter, a 14bp molecular index (i5), the Read 1 priming site and an N-mer. The N-mer anneals to single-stranded template DNA and is extended to create products of around 1,000-1,500bp; these products then enter a fairly standard Illumina library prep, and after shearing the P7 adapter is ligated, ready for Illumina sequencing. The i5 index is used as the molecular barcode and i7 is used for sample barcoding.
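The claim that a partition rarely contains both alleles can be checked with a simple Poisson model. This sketch assumes that the number of molecules covering a given locus in one partition, from each haplotype, is an independent Poisson variable with mean `lam`; reading “0.1% of the genome” as split evenly between the two haplotypes gives `lam = 0.0005`, which is an assumption rather than a 10X figure.

```python
import math


def p_both_haplotypes(lam: float) -> float:
    """Probability that a partition containing DNA from a locus holds molecules
    from BOTH haplotypes at that locus.

    Assumes the number of molecules covering the locus from each haplotype is
    an independent Poisson variable with mean lam.
    """
    if lam <= 0:
        raise ValueError("lam must be positive")
    p_hap = -math.expm1(-lam)  # P(at least one molecule from a given haplotype)
    p_any = -math.expm1(-2.0 * lam)  # P(at least one molecule from either haplotype)
    return p_hap * p_hap / p_any


if __name__ == "__main__":
    lam = 0.0005  # assumption: 0.1% of the genome per partition, split across two haplotypes
    p = p_both_haplotypes(lam)
    print(f"P(both alleles | locus covered) = {p:.6f} (~1 in {1 / p:,.0f})")
```

The expression simplifies to tanh(lam/2), so with these assumptions only about one partition in 4,000 that covers a locus also carries the other allele.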

Find out more about the tools 10X have built at software.10xgenomics.com. These include a BASIC pipeline for people not wanting to use a standard reference genome.

10X are also developing a barcode-aware aligner.

A 10X Genomics prep is around $500 and the box is $75,000: pretty good value considering the additional information gained. I’m still not clear on how much additional sequencing will be required to make the most of this technology, but in some areas it may become a standard tool.
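To see how extra sequencing interacts with the $500 prep, here is a tiny per-sample cost model. The base sequencing cost and the extra-sequencing fraction are free parameters (hypothetical values, not 10X figures), and the instrument cost is ignored.

```python
def per_sample_cost(base_sequencing_cost: float,
                    extra_sequencing_fraction: float = 0.0,
                    prep_cost: float = 500.0) -> float:
    """Consumable cost per sample: 10X prep plus (possibly increased) sequencing.

    Instrument cost is ignored; base_sequencing_cost and the extra fraction are
    user-supplied assumptions.
    """
    return prep_cost + base_sequencing_cost * (1.0 + extra_sequencing_fraction)


def base_cost_at_fold(fold: float,
                      extra_sequencing_fraction: float = 0.0,
                      prep_cost: float = 500.0) -> float:
    """Base sequencing cost at which the 10X workflow costs `fold` times the
    standard workflow, i.e. solve prep + base * (1 + extra) = fold * base."""
    denominator = fold - 1.0 - extra_sequencing_fraction
    if denominator <= 0:
        raise ValueError("fold must exceed 1 + extra_sequencing_fraction")
    return prep_cost / denominator


if __name__ == "__main__":
    cost = per_sample_cost(1_000, extra_sequencing_fraction=0.3)  # hypothetical $1,000 base
    print(f"${cost:,.0f} per sample ({cost / 1_000:.1f}x)")
    print(f"Cost triples when base sequencing is ${base_cost_at_fold(3, 0.3):,.0f}")
```

For example, a hypothetical $1,000 of base sequencing plus 30% extra comes to $1,800 per sample (1.8x); with 30% extra sequencing, the per-sample cost only triples when base sequencing costs about $294.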

Generating FASTQ uses a different BCL-to-FASTQ pipeline: the 10X Genomics bclprocessor pipeline takes the Illumina BCL output and demultiplexes using the i7 sample index read. It generates FASTQs for each sequence read and also for the 14bp i5 molecular barcode. This is different to standard pipelines: it’s a dual-index sequencing run, but only the i7 index is used for demultiplexing. How this might affect our pipelines is yet to be tested.
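Because the molecular barcode arrives in its own FASTQ, downstream tools have to match each observed barcode against the list of barcodes known to exist. One common approach, sketched here as an assumption rather than a description of what bclprocessor does, is to accept exact matches and rescue reads whose barcode is a single mismatch away from exactly one whitelisted barcode. The whitelist below is a hypothetical toy one.

```python
from typing import AbstractSet, Iterator, Optional

BASES = "ACGT"


def one_mismatch_variants(seq: str) -> Iterator[str]:
    """Yield every sequence exactly one substitution away from seq (an N is always substituted)."""
    for i, base in enumerate(seq):
        for alt in BASES:
            if alt != base:
                yield seq[:i] + alt + seq[i + 1:]


def correct_barcode(observed: str, whitelist: AbstractSet[str]) -> Optional[str]:
    """Return the whitelisted barcode for an observed barcode read, or None.

    Exact matches are accepted; otherwise the read is rescued only if exactly
    one whitelisted barcode lies a single mismatch away.
    """
    if observed in whitelist:
        return observed
    hits = {v for v in one_mismatch_variants(observed) if v in whitelist}
    return next(iter(hits)) if len(hits) == 1 else None


if __name__ == "__main__":
    whitelist = {"ACGTACGTACGTAC", "TTGCAAGCTTGCAA"}  # hypothetical 14bp barcodes
    for seen in ["ACGTACGTACGTAC", "ACGTACGTACGTAN", "GGGGGGGGGGGGGG"]:
        print(seen, "->", correct_barcode(seen, whitelist))
```

Refusing ambiguous rescues keeps a sequencing error from silently merging reads from two different partitions.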

NIST data release: 10X Genomics released data from two NIST reference samples, NA12878 and an Ashkenazi trio. The N50 phase block was 12-20Mb, with a maximum of up to 40Mb (currently limited by software, due to be corrected very soon). In NA12878 the entire p arm of chromosome 10 was resolved to a single phase block (39.1Mb). The Ashkenazi trio data show a 50kb deletion very clearly in the child and father, while the mother has two functional copies of the gene. Phasing was critical in understanding whether variants were on one or both copies of a gene. They identified two missense mutations 7.5kb apart in MYPN, a gene associated with cardiomyopathy, and showed that both mutations were on the same copy, meaning there was still a functional copy in the sample. In another gene, MEFV, two variants were shown to be on different copies; if these had been deleterious, the gene would have been knocked out.
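Two of the quantities in this paragraph are easy to compute from a phased VCF. The sketch below calculates a phase-block N50 from a list of block lengths, and classifies a pair of phased heterozygous variants as cis (same copy, as in MYPN) or trans (different copies, as in MEFV) using the VCF `GT` and `PS` (phase set) fields. VCF parsing is left out, and the function names are illustrative.

```python
from typing import Iterable


def n50(lengths: Iterable[int]) -> int:
    """Length L such that blocks of length >= L cover at least half the total."""
    values = sorted(lengths, reverse=True)
    total = sum(values)
    running = 0
    for length in values:
        running += length
        if 2 * running >= total:
            return length
    return 0  # empty input


def alt_haplotypes(gt: str) -> frozenset:
    """Indices of the haplotypes carrying a non-reference allele in a phased GT like '0|1'."""
    return frozenset(i for i, allele in enumerate(gt.split("|")) if allele not in ("0", "."))


def cis_or_trans(gt_a: str, ps_a: str, gt_b: str, ps_b: str) -> str:
    """Classify two heterozygous variants as 'cis', 'trans' or 'unknown'.

    Phase is only comparable when both genotypes are phased ('|') and share
    the same phase set (PS) value.
    """
    if "|" not in gt_a or "|" not in gt_b or ps_a != ps_b:
        return "unknown"
    hap_a, hap_b = alt_haplotypes(gt_a), alt_haplotypes(gt_b)
    if len(hap_a) != 1 or len(hap_b) != 1:
        return "unknown"  # homozygous or multi-allelic sites are not handled here
    return "cis" if hap_a == hap_b else "trans"


if __name__ == "__main__":
    print(n50([39, 20, 15, 12, 5, 3]))  # hypothetical phase-block lengths in Mb
    print(cis_or_trans("0|1", "1001", "0|1", "1001"))  # both alt alleles on haplotype 2
    print(cis_or_trans("0|1", "1001", "1|0", "1001"))  # alt alleles on different haplotypes
```

Variants in different phase blocks cannot be compared, which is one reason longer phase blocks matter for this kind of analysis.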

Other published results: there is a paper from the labs of Richard Durbin and David van Heel on bioRxiv that describes the use of 10X (albeit briefly): Health and population effects of rare gene knockouts in adult humans with related parents. The paper describes an analysis of rare-variant homozygous likely loss-of-function (rhLOF) calls in a large exome-sequenced cohort. The group suggest that rhLOF calls may require more cautious interpretation, as they found many instances of rhLOF variants previously described as deleterious with no discernible effect, e.g. individuals with GJB2 or MYO3A knockouts (both genes associated with hearing loss) without any symptoms.

The 10X data were used to investigate meiotic recombination in the maternal gamete transmitted from a PRDM9 knockout mother (who would be expected to be infertile) to her child. The confirmation of a healthy and fertile PRDM9 knockout adult human suggests differences in the mechanisms used to control meiotic crossovers in different mammals.

Customer data: several groups have generated data on the 10X platform and presented these results at various meetings (AGBT, ASHG, etc.).

Levi Garraway’s group have generated data showing detection of the TMPRSS2-ERG fusion in the prostate cancer cell line VCaP. This was very clearly determined by 10X WGS, but the initial exome-seq (Agilent V5) results were less conclusive because the size of the TMPRSS2-ERG intronic deletion was similar to the maximum fragment length that 10X works with. Adding intronic baits improved the results dramatically. This demonstrates one limitation of the technology, and the importance of understanding such limitations before diving in.

His group were also able to detect chromoplexy (first described in their 2013 Cell paper) with the 10X linked reads: the translocated loci are “promiscuous”, so ERG is found translocated with other genes, e.g. GFOD2, which also pairs with CBFB, which in turn pairs with TMPRSS2. This was much clearer in the 10X data than in the original short-read data.
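Linked reads make rearrangements like these stand out because molecules spanning a breakpoint place the same barcodes at two loci that are far apart in the reference. Here is a toy version of that idea, assuming reads are given as (barcode, chromosome, position) tuples, with hypothetical window size and thresholds. It compares every pair of windows, so it is only suitable for small examples, and it is not the method used by Garraway’s group or by 10X.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, Iterable, List, Set, Tuple

Window = Tuple[str, int]  # (chromosome, window index)


def barcodes_by_window(reads: Iterable[Tuple[str, str, int]],
                       window: int = 10_000) -> Dict[Window, Set[str]]:
    """Collect the set of barcodes seen in each fixed-size genomic window."""
    windows: Dict[Window, Set[str]] = defaultdict(set)
    for barcode, chrom, pos in reads:
        windows[(chrom, pos // window)].add(barcode)
    return windows


def candidate_links(windows: Dict[Window, Set[str]], min_shared: int = 3,
                    min_separation: int = 100) -> List[Tuple[Window, Window, int]]:
    """Report pairs of distant windows that share at least min_shared barcodes.

    Windows on the same chromosome closer than min_separation windows are
    skipped, because single molecules routinely span neighbouring windows.
    """
    links = []
    for (w1, bcs1), (w2, bcs2) in combinations(sorted(windows.items()), 2):
        if w1[0] == w2[0] and abs(w1[1] - w2[1]) < min_separation:
            continue
        shared = len(bcs1 & bcs2)
        if shared >= min_shared:
            links.append((w1, w2, shared))
    return links


if __name__ == "__main__":
    # Hypothetical reads: three barcodes seen at both chrA:1.00Mb and chrB:5.00Mb.
    reads = [(f"bc{i}", "chrA", 1_000_000 + i) for i in range(3)]
    reads += [(f"bc{i}", "chrB", 5_000_000 + i) for i in range(3)]
    reads.append(("bcX", "chrA", 2_000_000))
    print(candidate_links(barcodes_by_window(reads)))
```

A chain of such links (A with B, B with C, C with D) is the kind of pattern the promiscuous ERG/GFOD2/CBFB/TMPRSS2 partners would produce.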

Hanlee Ji’s group (Stanford) have used 10X to show that a focal amplification in a colorectal cancer sample is a tandem duplication. They have also demonstrated the technology in the analysis of gastric cancer with low tumour purity, where they found three duplications and an FGFR2 amplification.

One other group (I can’t find the reference, apologies) described using 10X to resolve genes with high homology and make variant calls in clinically relevant genes. The genes SMN1 and SMN2 are 99% homologous, making alignment with short reads practically impossible, and coverage consequently tanks in the highest-homology regions. However, data from both PacBio and 10X capture the variants, demonstrating the utility of long-read technologies in the clinic.
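Linked reads can help in regions like SMN1/SMN2 because a read that aligns equally well to two paralogous copies can be placed using other reads with the same barcode that align uniquely nearby. This is a hypothetical illustration of that tie-breaking step, not the barcode-aware aligner 10X are developing; the coordinates are made up and the 50kb window is an assumption.

```python
from typing import List, Optional, Sequence, Tuple

Position = Tuple[str, int]  # (chromosome, position)


def place_multimapping_read(candidates: Sequence[Position],
                            barcode_anchors: Sequence[Position],
                            window: int = 50_000) -> Optional[Position]:
    """Choose among equally good alignments using same-barcode reads.

    candidates: alternative positions where the read aligns equally well.
    barcode_anchors: positions of uniquely aligned reads with the same barcode.
    Returns the single best-supported candidate, or None if there is no
    support or a tie.
    """
    def support(candidate: Position) -> int:
        chrom, pos = candidate
        return sum(1 for a_chrom, a_pos in barcode_anchors
                   if a_chrom == chrom and abs(a_pos - pos) <= window)

    scores: List[int] = [support(c) for c in candidates]
    if not scores:
        return None
    best = max(scores)
    if best == 0 or scores.count(best) > 1:
        return None
    return candidates[scores.index(best)]


if __name__ == "__main__":
    paralog_hits = [("chr5", 1_000), ("chr5", 900_000)]  # made-up paralogous positions
    anchors = [("chr5", 20_000), ("chr5", 30_000)]  # unique same-barcode reads
    print(place_multimapping_read(paralog_hits, anchors))
```

Returning None on ties, rather than guessing, mirrors the cautious behaviour you would want in clinically relevant genes.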

Using 10X to determine tumour heterogeneity: an area I am eager to see develop is the use of 10X Genomics technology to resolve tumour heterogeneity from copy-number data. Tumour clones can vary significantly in their copy-number aberrations (CNAs). Phasing CNAs using low-coverage WGS and tools such as a 10X-aware version of QDNAseq might allow a much clearer picture of heterogeneity to emerge. Of course a full genome analysis would be great, but with some tumours containing multiple clones, some at low frequency, a very high-depth genome is going to be required. Low-coverage WGS is cost-effective and might be easier to apply in this context.
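A very rough sketch of what haplotype-aware copy-number estimation might look like for low-coverage data, assuming each read has already been assigned to haplotype 1 or 2 (or left unassigned) via its barcode, and that the expected read count per copy in a bin is known. The function is hypothetical, and it ignores tumour purity, GC bias and mappability, all of which a real tool such as QDNAseq has to handle.

```python
from typing import List, Optional, Sequence, Tuple


def haplotype_copy_numbers(bins: Sequence[Tuple[int, int, int]],
                           reads_per_copy: float) -> List[Optional[Tuple[float, float]]]:
    """Rough per-bin copy number for each haplotype.

    Each bin is (hap1_reads, hap2_reads, unassigned_reads). Unassigned reads
    are shared out in proportion to the assigned ones, and reads_per_copy is
    the expected read count per bin for one copy. Bins with no
    haplotype-assigned reads return None.
    """
    result: List[Optional[Tuple[float, float]]] = []
    for hap1, hap2, unassigned in bins:
        assigned = hap1 + hap2
        if assigned == 0:
            result.append(None)
            continue
        scale = (assigned + unassigned) / assigned
        result.append((hap1 * scale / reads_per_copy, hap2 * scale / reads_per_copy))
    return result


if __name__ == "__main__":
    # Hypothetical bins: balanced, a gain on haplotype 1, and partly unassigned reads.
    bins = [(50, 50, 0), (100, 50, 0), (40, 40, 20)]
    print(haplotype_copy_numbers(bins, reads_per_copy=50))
```

The point of phasing here is the second bin: a total copy number of 3 alone does not say which parental copy was gained, whereas a 2:1 haplotype split does.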

Rare-disease analysis may benefit from 10X Genomics technology: I do wonder how much of an impact 10X might have on rare-disease analysis outside of trios, or in extending the understanding of family structure. Each 10X genome could be considered an analysis of three: the individual, plus the haplotype transmitted by each parent, since both haplotypes are resolved in a single run.

How many rare-disease cases this would benefit I have no idea, but I’d guess that in cases where only one parent, or neither, is available, 10X would add useful data. This is even more likely to be the case in adult-onset rare disease.

What about non-human genomes: so far almost all the data I’ve heard about is from human sequencing. It would be trivial to use the technology, and probably the tools with default parameters, for non-human genomes of similar size (e.g. 3Gb). For larger genomes more DNA will generally need to be added, but for complex genomes like wheat it may well be better to do more runs per sample instead, to keep the three subgenomes partitioned. For small genomes the amount of DNA can be dropped, or a carrier DNA could be added (and possibly removed before sequencing by a simple restriction digest). I’m not sure, but if you are working on really small genomes it might be possible to mix multiple organisms together to reach the required DNA input and let alignment to the different genomes sort everything out. Phased metagenomics perhaps?
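The input-scaling argument can be made concrete with a quick calculation. This sketch assumes an average mass of 650 g/mol per double-stranded base pair and takes the 1ng used for a ~3Gb human genome as the reference; keeping the number of genome copies constant then means scaling input mass linearly with genome size. Whether that is the right target for a given genome is an assumption, not 10X guidance.

```python
AVOGADRO = 6.02214076e23  # molecules per mole
BP_MASS_G_PER_MOL = 650.0  # assumed average mass of one double-stranded base pair


def genome_mass_pg(genome_size_bp: float) -> float:
    """Mass of one haploid copy of a genome of the given size, in picograms."""
    return genome_size_bp * BP_MASS_G_PER_MOL / AVOGADRO * 1e12


def genome_copies(input_ng: float, genome_size_bp: float) -> float:
    """Number of haploid genome copies in input_ng nanograms of DNA."""
    return input_ng * 1e3 / genome_mass_pg(genome_size_bp)


def scaled_input_ng(genome_size_bp: float,
                    reference_size_bp: float = 3e9,
                    reference_input_ng: float = 1.0) -> float:
    """Input mass giving the same number of genome copies as the reference."""
    return reference_input_ng * genome_size_bp / reference_size_bp


if __name__ == "__main__":
    print(f"1ng of a 3Gb genome ~ {genome_copies(1.0, 3e9):.0f} copies")
    for size in (5e6, 3e9, 6e9):  # hypothetical genome sizes
        print(f"{size:.0e} bp -> {scaled_input_ng(size):.4f} ng")
```

Under these assumptions 1ng of a 3Gb genome is roughly 300 haploid copies; a 6Gb genome would need about 2ng for the same number of copies, and a 5Mb genome only about 1.7pg, which is where carrier DNA becomes attractive.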

Hi Keith, thank you for the update on 10X.

Was at AGBT during their impressive launch, and I do have some questions about how useful this platform can be for structural variant detection and characterization in tumor samples. You rightly point out that it won't pick up a given large deletion, which makes me wonder what other structural variants it would also miss. At ICG-10 Steven Turner (PacBio CEO) showed a slide directly comparing 10X data to PacBio's; not surprisingly it was on the order of 10 versus 17K (or something like that; I had to type on an iPhone screen for three days, which was very unpleasant, but that's another story…)

Question for you – it is clear that phasing will be an important research tool, but not one for the clinic for a while to come; and if there is a need for phasing in discrete regions after discovery / characterization a long-range PCR followed by single-molecule sequencing would be the way to routinely assay for this. And a second question would be the expense of whole-exome phasing as a research tool limiting adoption ($500 sounds relatively inexpensive, but if you need 30% more sequencing your given exome cost/sample could triple). And at 3x a given price/exome, for the majority of the exome market they may just wait until the pricing comes down (either on 10X or long reads from either PacBio or ONT).

Would be interested to hear what you think. Thanks again for a nice write-up.

Dale

No problem Dale, but this is James's blog, not Keith's!

I do think phasing could move reasonably quickly into the clinic, but as a niche test. A big impact could be in understanding mutations in tumour suppressor and DNA repair genes, where two mutations may be tolerable if they are in phase (on the same copy) but deleterious if they knock out both copies. This is outside my area of expertise, but the technology is so cool I am excited by it.

(Facepalm) – I'm reading so much nowadays I'm forgetting where I am!

All the best – Dale

Hope this is true. Then, in the end, fewer people will suffer from rare diseases, including some types of cancer.